Norman-ai

From a messy mobile app to a tool people actually use.

I was hired to bring the mobile app to the web. Instead of just resizing screens, I saw a chance to simplify the experience. The result is a tool that turns complex tech into a product people enjoy using.

Responsibilities

UX Research

Product Design

Design system

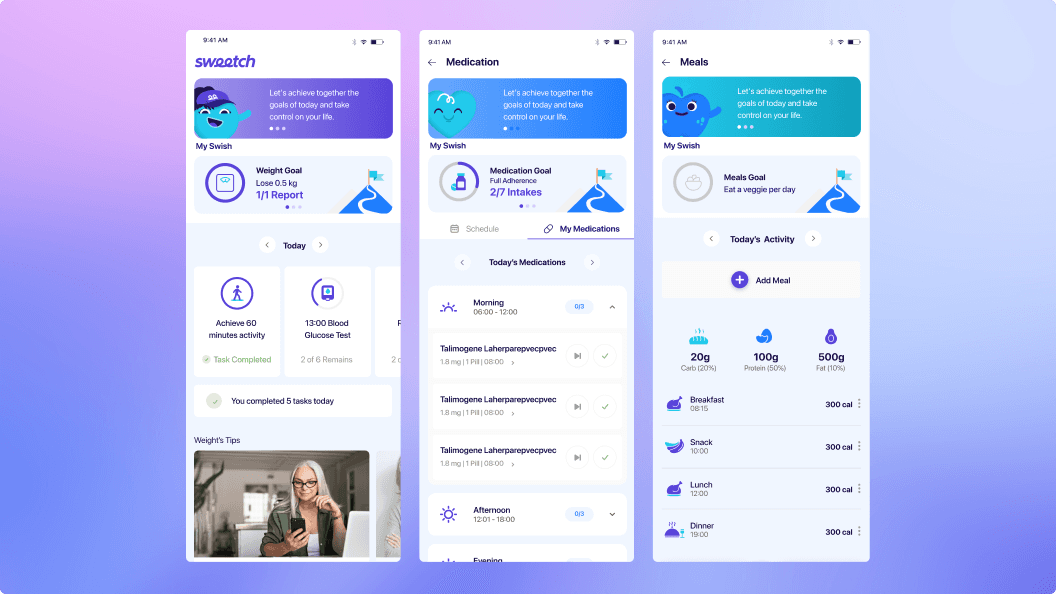

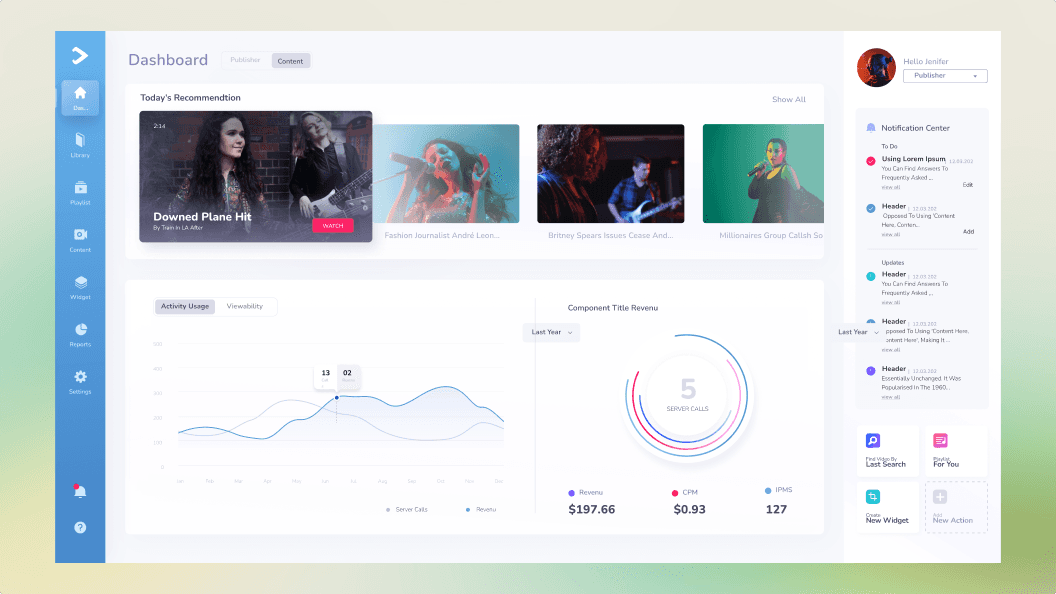

Unpacking the Mobile Experience

I analyzed the mobile app and found several points of friction. I felt that the desktop version required a more organized approach. To get it right, I mapped the current flow to identify the gaps:

Learning from the giants (and their mistakes)

I checked big players like Baseten and Together AI to see if they’d solved the same struggle. Turns out, the market is full of 'smart' tools that are surprisingly hard to use.

Why build only for engineers?

Most competitors look like coding tools, which immediately scares away non-technical users.

Do more options mean a better tool?

Having too many sliders and buttons without any guidance is confusing.

Limited "one-task" layouts

The flow was linear (Input → Output). If a user wanted to change one small thing, they had to start the whole process over.

The Solution

I focused on the biggest friction points I found during the audit.

Here are five key updates that made the workflow smoother and more intuitive:

Spotlight 01

A Guided Experience

Problem:

The old setup was confusing. Users didn't know which step was next or how much work was left.

Solution:

Stepper: Anchors users by clearly showing their progress and what’s left.

Smart Auto-fill: Automatically populates fields to prevent errors and speed up the workflow.

Spotlight 02

Confidence Before Commitment

Problem:

In the mobile app, users couldn't see their final configuration until the very end.

Solution:

Unified Summary: Collects all choices in one view, so users don't have to rely on memory.

Live Preview: Shows the outcome before execution, giving users the confidence to finish.

Quick Edit: Allows for fast adjustments on the spot, without breaking the flow.

Spotlight 03

Turning "Loading" into Discovery

Problem:

Users were trapped on a static screen with no progress updates and no way to exit until the process finished.

Solution:

Live Progress: Shows exactly what is happening, step by step.

Background Mode: Allows users to leave the screen and keep working while the model runs.

Instant Access: Users can click on generated results to enter their workflow and run new models immediately.

Spotlight 04

Breaking the Linear Chain

Problem:

Most tools are strictly linear. If you want to try different models or turn an image into a video, you have to switch screens and start over.

Solution:

One Input, Multiple Outputs: Users can connect a single prompt to several models at once.

Seamless Chaining: Create complex flows (like Text → Image → Video) without leaving the screen.

Visual Traceability: Clear connection lines map the flow, so users can instantly see where each result came from and never feel lost.
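To make the canvas idea concrete, here is a minimal sketch of how connection lines could be modeled as a small graph. It is an illustration only, not the product's actual implementation: the `FlowGraph` class, its methods, and the node names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FlowGraph:
    """Hypothetical canvas model: nodes are prompts or model runs, edges are connection lines."""

    # Maps each node id to the ids of the nodes that feed into it.
    parents: dict[str, list[str]] = field(default_factory=dict)

    def connect(self, source: str, target: str) -> None:
        """Draw a connection line from source to target."""
        self.parents.setdefault(source, [])
        self.parents.setdefault(target, []).append(source)

    def trace(self, node_id: str) -> list[str]:
        """Return every upstream node that contributed to node_id, nearest first."""
        seen: list[str] = []
        queue = list(self.parents.get(node_id, []))
        while queue:
            current = queue.pop(0)
            if current not in seen:
                seen.append(current)
                queue.extend(self.parents.get(current, []))
        return seen


graph = FlowGraph()
# One input, multiple outputs: a single prompt feeds two (made-up) image models.
graph.connect("prompt", "image_model_a")
graph.connect("prompt", "image_model_b")
# Chaining: Text -> Image -> Video on the same canvas.
graph.connect("image_model_a", "video_model")

print(graph.trace("video_model"))  # ['image_model_a', 'prompt']
```

Storing each node's parents is what makes traceability cheap: following the lines backwards from any result answers "where did this come from?" without the user having to remember.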

Spotlight 05

Seamless Discovery

Problem:

Testing a different model usually forces users to stop and reset. This interrupts the workflow and stops users from experimenting.

Solution:

Inline Switcher: Users can swap models directly on the canvas without deleting their work.

Context Preserved: The prompt and connections stay active, allowing for continuous iteration.

Visual Sidebar: A dedicated left-side panel lets users browse 50+ models instantly, without hiding the canvas.

Smart Tooltips: Hovering over a model explains exactly what it does, so users don't have to guess before clicking.

The Vision: Content-First Logic

Users shouldn't need to choose the right model. The system will analyze the uploaded assets and automatically select the best tool. For example, uploading a cartoon image routes the task straight to the animation engine. The content dictates the tool.
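As a rough sketch of what content-first routing could look like, the snippet below maps an analyzed asset to an engine. This is a vision-stage illustration under stated assumptions: the `Asset` fields, the rule table, and every engine name other than the cartoon-to-animation example are hypothetical, and the analysis step that detects the style is assumed to exist elsewhere.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """Hypothetical result of analyzing an upload (the analysis itself is out of scope here)."""

    media_type: str  # e.g. "image" or "text"
    style: str       # e.g. "cartoon" or "photo"


# Illustrative rules only: (media_type, style) -> engine name.
ROUTES = {
    ("image", "cartoon"): "animation_engine",
    ("image", "photo"): "photo_engine",  # made-up second rule for contrast
}

# Assumed fallback: let the user pick manually when no rule matches.
DEFAULT_ENGINE = "manual_selection"


def route(asset: Asset) -> str:
    """Pick an engine from the asset's content instead of asking the user."""
    return ROUTES.get((asset.media_type, asset.style), DEFAULT_ENGINE)


print(route(Asset("image", "cartoon")))  # animation_engine
print(route(Asset("text", "plain")))     # manual_selection
```

Keeping a manual fallback means the automation never blocks the user when the content is ambiguous.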

Outcomes & Impact

Strategic Impact

The new design turned complex technology into a real product. This gave investors the confidence they needed to support our next funding round.

Scalable Impact

This design became the foundation for our entire ecosystem. We are now updating every part of the company, including the mobile app and website, to follow this new system.

Personal Growth

As this was my first job as a sole designer, I am especially proud of the result. I proved to myself that I can take full ownership and lead a complex project from the first idea to the final design.
